This policy brief is based on BIS working paper No 1194. The views expressed in this policy brief are those of the authors and do not necessarily reflect those of the Bank for International Settlements.

Abstract

The drive to adopt artificial intelligence in finance hides a key truth: while better information processing has boosted efficiency, it has also brought more complex risks. AI has enhanced information processing, data analysis, pattern recognition and predictive power in the financial system. At the same time, it exacerbates data privacy concerns, the risk of algorithmic discrimination, market concentration and network interconnectedness. This policy brief examines the key opportunities and challenges posed by AI and proposes a framework for adapting regulatory approaches to its transformative effects in uncertain scenarios. The framework is built on fundamental principles of AI governance, including transparency, accountability, fairness, safety, and human oversight. Furthermore, it underscores the critical importance of international coordination to achieve coherent and effective oversight of AI in the global financial system.

The financial system functions like the brain of the economy, transforming large amounts of complex information into price signals that steer resource allocation. It supports economic health by managing risk, maintaining liquidity, ensuring stability, and facilitating the smooth flow of capital. Handling complex information and coordinating numerous agents in the economy is a challenging task and requires cutting-edge information processing technology. It is not surprising then that the financial sector is at the forefront of harnessing advances in artificial intelligence (AI).

Technology is not new for the financial sector. Throughout history, advancements in information processing—from basic bookkeeping to sophisticated AI algorithms—have continuously transformed the financial landscape. The advent of generative AI (GenAI) represents the latest frontier in this evolution. To understand the importance of the latest technological advancements for the financial sector, it is helpful to trace developments in information processing technology concurrently with those in finance.

AI refers to computer systems that perform tasks typically requiring human intelligence; it emerged from basic computation systems (Figure 1).¹ Even early AI, based on if-then rules and symbolic representations, was already useful for basic financial functions. The most significant changes, however, came with machine learning (ML) models. ML algorithms can autonomously learn and perform tasks, for example classification and prediction, without the underlying rules being spelled out explicitly. ML models were quickly adopted in finance, although their applications were limited by computing power. In the last 15 years, computing power has doubled every six months, leading to very rapid progress in the field of AI. Complemented by the availability of vast amounts of training data, better computing hardware has led to GenAI models, capable of generating data (text, video, images) themselves. Large language models (LLMs) like ChatGPT are a type of GenAI that specialises in processing and generating human language.
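To give a sense of scale for the doubling rate quoted above, the short Python sketch below compounds a six-month doubling over 15 years. It is illustrative arithmetic only: the function name `compute_growth_factor` is hypothetical, and the calculation assumes the doubling rate is perfectly constant over the whole period.

```python
def compute_growth_factor(years: float, doubling_months: float = 6.0) -> float:
    """Growth multiple after `years` if capacity doubles every `doubling_months`.

    Assumes a constant doubling rate, which is a simplification.
    """
    doublings = years * 12.0 / doubling_months  # number of doubling periods
    return 2.0 ** doublings


# 15 years at one doubling every six months is 30 doublings.
factor = compute_growth_factor(15)
print(f"{factor:,.0f}")  # 1,073,741,824
```

Under that assumption, 30 successive doublings amount to roughly a billion-fold increase, which helps explain why applications that were once constrained by computing power have become feasible.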

Each generation of AI has created new opportunities and challenges for the financial system. In financial intermediation, AI technologies have revolutionised the process of risk assessment and fraud detection, allowing for more accurate and efficient credit scoring and lending decisions. AI’s ability to analyse large datasets and identify patterns has enabled financial institutions to offer personalised financial services, improving customer satisfaction and financial inclusion. For example, AI algorithms can assess the creditworthiness of borrowers by analysing non-traditional data sources such as social media activity and online transaction history, providing financial access to underserved populations who may lack traditional credit histories.

Figure 1. Decoding AI. Source: Aldasoro et al (2024)

In the insurance sector, AI has streamlined underwriting processes and claims management. Advanced data analytics and machine learning models have enhanced risk modelling, allowing insurers to price premiums more accurately and manage risks. AI-driven automation has also reduced administrative costs and improved the overall efficiency of insurance operations. For instance, AI-powered chatbots can handle customer inquiries and process claims quickly, freeing up human agents to focus on more complex tasks. AI can also use data from connected devices, such as wearables and smart home sensors, to provide real-time risk assessments and personalised insurance products.

Asset management and payments are other areas where AI has enabled significant transformations. AI-driven investment strategies and portfolio management tools can optimise returns by leveraging vast amounts of market data to identify trends and make informed investment decisions. In payments, AI is regularly used for fraud detection, anti-money laundering initiatives and liquidity management. For instance, AI can analyse transaction patterns in real time to detect fraudulent activities, reducing the incidence of payment fraud and enhancing the security of digital transactions. Overall, AI has allowed for the development of more secure and efficient payment systems.

For the specific case of GenAI and LLMs, two key aspects extend the opportunities for the financial sector further. First, AI before LLMs allowed for better processing of traditional financial data, whereas GenAI and LLMs can also process new, often unstructured data such as images, video and audio. This can be particularly useful for risk analysis, credit scoring, prediction and asset management. Second, LLMs enable machines to converse like humans, which can lead to significant improvements in back-end processing, customer support, robo-advising and regulatory compliance.

As the opportunities created by technology have grown, so have the risks and challenges. Some challenges concern individual financial institutions, and some affect the financial system as a whole. Among the former, one major concern is data privacy. The extensive use of personal data by AI systems raises significant privacy issues, as financial institutions collect and process vast amounts of sensitive information. Ensuring data privacy and protecting against data breaches are critical challenges that need to be addressed to maintain public trust in AI-driven financial services. Algorithmic bias is another significant risk. AI systems can inadvertently perpetuate or exacerbate existing biases, leading to discriminatory practices in financial services. For instance, biased algorithms in credit scoring can result in unfair lending decisions that disproportionately affect certain demographic groups. Using diverse training data and conducting regular audits of algorithms can help deal with the risk of algorithmic bias.
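One simple check that an audit of a credit-scoring algorithm might include is a comparison of approval rates across demographic groups. The Python sketch below is a minimal illustration under stated assumptions, not a method prescribed in this brief: the function names, the sample data and the 0.8 flagging threshold are all hypothetical, and a real audit would use many more metrics and controls.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals at all, so no gap between groups
    return min(rates.values()) / highest


# Hypothetical audit sample: group A has 8 of 10 approved, group B 5 of 10.
sample = ([("A", True)] * 8 + [("A", False)] * 2
          + [("B", True)] * 5 + [("B", False)] * 5)

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative threshold, an assumption

print(rates, round(ratio, 3), flagged)
```

A low ratio does not by itself prove discrimination, since groups may differ in legitimate risk characteristics, but it indicates where a closer review of the model and its training data may be warranted.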

Other challenges relate to the financial system as a whole. AI models, the underlying datasets and computational resources are all concentrated in the hands of a few big technology companies. Market concentration can not only reduce competition and innovation in the financial sector, but it can also lead to concentrated third-party dependencies and exacerbate cyber risk. The reliance of financial institutions on only a few AI providers creates single points of failure that can have widespread repercussions. For example, a malfunction or cyberattack targeting AI systems could disrupt financial markets and institutions, leading to significant economic and financial instability.

The interconnectedness of the financial system combined with the expanding use of AI in financial services can also exacerbate systemic risks. Take for example trading algorithms. While the use of AI in trading has increased market efficiency, it has also introduced new risks related to market stability. High-frequency trading algorithms can execute trades in milliseconds, potentially amplifying market volatility and creating flash crashes. Another important aspect is the increasing risk of uniform and pro-cyclical predictions as financial institutions rely on the same models and the models themselves rely on the same underlying datasets. Compounding these challenges is the inherent lack of explainability of AI models, which might make it difficult for regulators to spot market manipulation or a build-up of systemic risk in time.

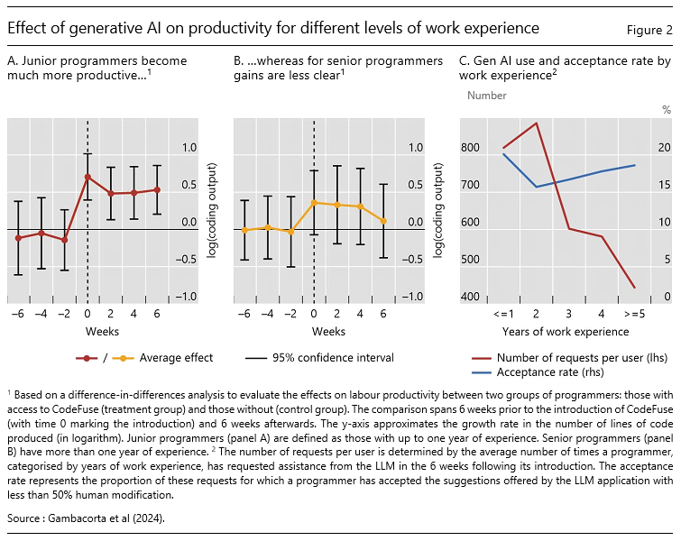

At the same time, AI adoption in the real sector is also going to be important for the financial system and systemic risk. There is substantial uncertainty about the medium- and long-term effects of AI in the real economy. Early research shows the productivity-enhancing effects of AI, particularly in tasks that require high cognitive skills and for workers that are less experienced. In the more optimistic scenario, AI will continue to drive innovation, efficiency, and productivity growth, creating new opportunities and enhancing economic development. In this case, the risk to the financial system arising from the real sector will be limited. But there could also be a more disruptive scenario in which technology progresses rapidly and there is large-scale labour displacement and redistribution of wealth. The concentration of market power, algorithmic biases, cyber threats and data privacy issues could undermine public trust in the financial system and exacerbate social and economic disparities. In this case, AI use in the real sector can lead to widespread defaults and financial instability.

In any scenario, the risks posed by AI will extend the focus of financial regulation beyond traditional policy objectives. Beyond concerns like financial stability and market integrity, regulation will have to speak to new challenges of data privacy, algorithmic discrimination and geopolitical risks. Striking the right balance between leveraging AI’s benefits and managing its risks will require a comprehensive regulatory approach that addresses technological, societal, and ethical considerations. Of course, not all AI-related risks need regulatory intervention, as some can be managed through market mechanisms, particularly those not affecting broader objectives like financial stability or consumer protection. However, complex AI models may lead to unforeseen risks, making it difficult for regulators to respond quickly.

Uncertainty associated with technological progress highlights the need to have regulatory principles that align technological development with social and economic goals and reduce the risk of harm. Such principles would be based on transparency, accountability, fairness, safety, and human oversight. Transparent and accountable AI systems are essential to ensure that stakeholders understand how decisions are made and how liability should be assigned. For instance, financial institutions should provide clear explanations of their AI-driven decision-making processes, allow customers to challenge and correct any erroneous or biased decisions and put in place governance structures that assign clear roles and responsibilities for AI oversight. Financial institutions should also conduct regular audits and assessments of their AI systems to ensure compliance with regulatory requirements and ethical standards. To understand AI-driven systemic risks, regulators might want to stress-test different AI integration, homogenisation, and capability scenarios, to anticipate impacts on different markets and regulatory oversight ability.

Other key regulatory principles include fairness, safety, privacy protection and human oversight. Fairness in AI systems is crucial to prevent discriminatory practices and ensure equitable access to financial services. Ensuring the safety of AI systems involves robust cybersecurity measures and resilience against potential failures. This points towards the need for financial institutions to invest in advanced security technologies to protect their AI systems from cyber threats and data breaches. Human oversight is necessary to monitor and intervene in AI-driven processes, maintaining a balance between automation and human judgment. This would involve training and empowering financial professionals to understand and manage AI technologies, as well as establishing mechanisms for human review and intervention in AI-driven decision-making processes.

For effective AI regulation that balances innovation with potential harms, international coordination will be crucial. The cross-border nature of financial markets and the global reach of AI technologies require harmonised regulatory approaches to manage risks and ensure consistent standards. Collaborative efforts among regulatory bodies, financial institutions, and technology providers are essential to develop and harness effective AI. This includes working towards a common ground for AI ethics, transparency, and accountability, as well as promoting cross-border transfer of knowledge and best practices.

Aldasoro, I, L Gambacorta, A Korinek, V Shreeti and M Stein (2024): “Intelligent financial system: How AI is transforming finance”, BIS Working Papers, 1194.

Gambacorta, L, H Qiu, D Rees and S Shian (2024): “Generative AI and labour productivity: A field experiment on code programming”, BIS Working Papers, 1208.

Norvig, P, and S Russell (2021): “Artificial intelligence: a modern approach, global edition”, Pearson, 4th edition.

¹ Norvig and Russell, 2010.